Based on some testing, I have several new pieces of information on this issue.

1. Performance limited by controller configuration.

First, I tracked down the underlying root cause of the performance problems I've been having. Two of my controller cards are RAIDCore PCI-X controllers, which I am using for 16x SATA connections. These have fantastic performance for physical disks that are initialized with RAIDCore structures (so they can be used in arrays, or even as JBOD). They also support non-initialized disks in "Legacy" mode, which is what I've been using to pass-through the entire physical disk to SS. But for some reason, occasionally (but not always) the performance on Server 2012 in Legacy mode is terrible - 8MB/sec read and write per disk. So this was not directly a SS issue.

Since my SS pools were built on top of disks, some of which were on the RAIDCore controllers in Legacy mode, the performance of virtual disks in the prior configuration was limited by the poor performance of some of the underlying disks. This may also have caused the unresponsiveness of the entire machine, if the Legacy mode operation had interrupt problems. So the first lesson is - check the entire physical disk stack, under the configuration you are using for SS, first.
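As a rough way to run the per-disk check suggested above, here is a minimal sketch in Python that times a sequential write and read of a scratch file on one volume. The function name, file size and block size are my own choices for illustration, not something from the testing described here, and the read figure can be inflated by the OS file cache unless the file is much larger than RAM.

```python
import os
import sys
import time

def sequential_throughput(path, size_mb=256, block_mb=4):
    """Write then read size_mb of data at path; return (write, read) in MB/sec."""
    block = os.urandom(block_mb * 1024 * 1024)
    blocks = size_mb // block_mb

    # Sequential write, flushed to the device before the clock stops.
    start = time.perf_counter()
    with open(path, "wb", buffering=0) as f:
        for _ in range(blocks):
            f.write(block)
        os.fsync(f.fileno())
    write_secs = time.perf_counter() - start

    # Sequential read of the same file (may be served partly from cache).
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:
        while f.read(len(block)):
            pass
    read_secs = time.perf_counter() - start

    os.remove(path)
    total_mb = blocks * block_mb
    return total_mb / write_secs, total_mb / read_secs

if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass a scratch file path on the disk under test as the first argument.
    write_rate, read_rate = sequential_throughput(sys.argv[1])
    print(f"write {write_rate:.0f} MB/sec, read {read_rate:.0f} MB/sec")
```

Running this once per physical disk (each formatted as its own volume) before building the pool should make a disk stuck at around 8MB/sec stand out against ones running near 100MB/sec.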

My solution is to use all RAIDCore-attached disks with the RAIDCore structures in place, and so the performance is more like 100MB/sec read and write per disk. The problems with this are (a) a limit of 8 arrays/JBOD groups to be presented to the OS (for 16 disks across two controllers), and (b) loss of a little capacity to RAIDCore structures.

However, an additional advantage is the ability to group disks as JBOD or RAID0 before presenting them to SS, which provides better performance and efficiency due to limitations in SS.

Unfortunately, this goes against advice at http://social.technet.microsoft.com/wiki/contents/articles/11382.storage-spaces-frequently-asked-questions-faq.aspx, which says "RAID adapters, if used, must be in non-RAID mode with all RAID functionality disabled." But it seems necessary for performance, at least on RAIDCore controllers.

2. SS/Virtual disk performance guidelines.

Based on testing different configurations, I have the following suggestions for parity virtual disks:

(a) Use disks in SS pools in multiples of 8 disks.

SS has a maximum of 8 columns for parity virtual disks. But it will use all disks in the pool to create the virtual disk. So if you have 14 disks in the pool, it will use all 14 disks with a rotating parity, but still with 8 columns (1 parity slab per 7 data slabs). Unexpectedly, the write performance of this is a little worse than if you were to use just 8 disks. Also, the efficiency of being able to fully use different sized disks is much higher with multiples of 8 disks in the pool.

I have 32 underlying disks but a maximum of 28 disks available to the OS (due to the 8 array limit for RAIDCore). But my best configuration for performance and efficiency is when using 24 disks in the pool.

(b) Use disks as similar sized as possible in the SS pool.

This is about the efficiency of being able to use all the space available. SS can use different sized disks with reasonable efficiency, but it can't fully use the last hundred GB of the pool with 8 columns if the disks are different sizes and the number of disks in the pool is not a multiple of 8. You can create a second virtual disk with fewer columns to soak up this remaining space. However, my solution to this has been to put my smaller disks on the RAIDCore controller, and group them as RAID0 (for equal sized) or JBOD (for different sized) before presenting them to SS.

It would be better if SS could do this itself rather than needing a RAID controller to do this. For example, say you have 6x 2TB and 4x 1TB disks in the pool. Right now, SS will stripe 8 columns across all 10 disks (for the first 10TB /8*7), then 8 columns across 6 disks (for the remaining 6TB /8*7). But it would be higher performance and a more efficient use of space to stripe 8 columns across 8 disk groups, configured as 6x 2TB and 2x (1TB + 1TB JBOD).
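To spell out the "/8*7" arithmetic in the example above, here is a small Python sketch. It only models parity overhead (one column in every 8 holds parity); the helper name is made up for illustration, and it says nothing about how SS actually places slabs or how much space it strands at the end of the disks.

```python
from fractions import Fraction

COLUMNS = 8  # parity column limit described in this post

def parity_usable(raw_tb, columns=COLUMNS):
    """Usable TB when one column in every stripe holds parity."""
    return raw_tb * Fraction(columns - 1, columns)

# Layout described above: the first 1TB of all 10 disks, then the
# second 1TB of the six 2TB disks.
first_region = parity_usable(10)
second_region = parity_usable(6)

# Proposed grouping: 6x 2TB plus 2x (1TB + 1TB JBOD) = 8 groups of 2TB.
grouped = parity_usable(8 * 2)

print(float(first_region), float(second_region), float(grouped))
# prints: 8.75 5.25 14.0
```

Under this simple model both layouts top out at 14TB usable, so any gain from grouping would have to come from how well the 8 columns actually fit onto the available disks and from performance, not from the parity ratio itself.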

(c) For maximum performance, use Windows to stripe different virtual disks across different pools of 8 disks each.

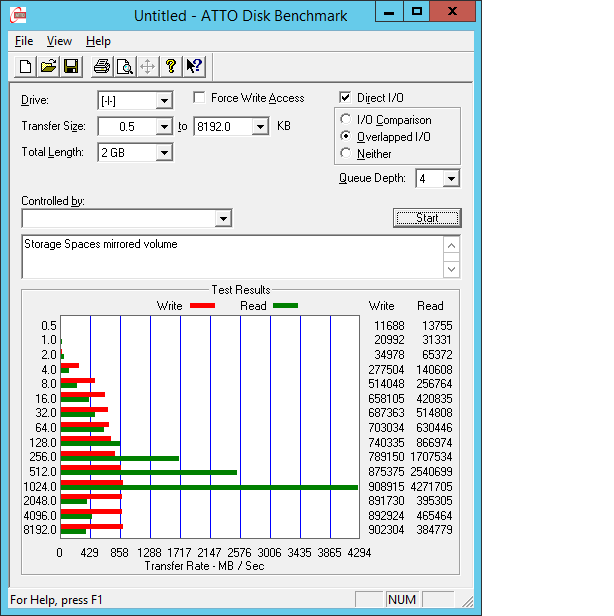

On my hardware, each SS parity virtual disk appears to be limited to 490MB/sec reads (70MB/sec/disk, up to 7 disks with 8 columns) and usually only 55MB/sec writes (regardless of the number of disks). If I use more disks - e.g. 16 disks, this limit is still in place. But you can create two separate pools of 8 disks, create a virtual disk in each pool, and stripe them together in Disk Management. This then doubles the read and write performance to 980MB/sec read and 110MB/sec write.
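The numbers above follow a simple pattern, sketched here in Python. The per-disk read rate and per-virtual-disk write rate are the figures measured on my hardware; the assumption that throughput scales linearly with the number of striped pools is only confirmed by the two-pool case, and the function name is just for illustration.

```python
READ_PER_DISK_MB_S = 70    # measured read rate per data disk
WRITE_PER_VDISK_MB_S = 55  # measured write rate per parity virtual disk
COLUMNS = 8                # parity column limit (7 data + 1 parity)

def striped_throughput(pools):
    """Estimated (read, write) MB/sec for `pools` 8-disk parity virtual
    disks striped together, assuming linear scaling."""
    data_columns = COLUMNS - 1
    read = pools * data_columns * READ_PER_DISK_MB_S
    write = pools * WRITE_PER_VDISK_MB_S
    return read, write

for pools in (1, 2):
    read, write = striped_throughput(pools)
    print(f"{pools} pool(s): {read} MB/sec read, {write} MB/sec write")
# prints:
# 1 pool(s): 490 MB/sec read, 55 MB/sec write
# 2 pool(s): 980 MB/sec read, 110 MB/sec write
```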

It is a shame that SS does not parallelize the virtual disk access across multiple 8-column groups that are on different physical disks, and that you need to work around this by striping virtual disks together. Effectively you are creating a RAID50 - a Windows RAID0 of SS RAID5 disks. It would be better if SS could natively create and use a RAID50 for performance. There doesn't seem to be any advantage in not doing this, as with the 8 column limit SS is using 2/16 of the available disk space for parity anyhow.

You may pay a space efficiency penalty if you have unequal sized disks by going the striping route. SS's layout algorithm seems optimized for space efficiency, not performance. Though it would be even more efficient to have dynamic striping / variable column width (like ZFS) on a single virtual disk, to fully be able to use the space at the end of the disks.

(d) Journal does not seem to add much performance.

I tried a 14-disk configuration, both with and without dedicated journal disks. Read speed was unaffected (as expected), but write speed only increased slightly (from 48MB/sec to 54MB/sec). This was the same as what I got with a balanced 8-disk configuration. It may be that dedicated journal disks have more advantages under random writes. I am primarily interested in sequential read and write performance.

Also, the journal only seems to be used if it is in the pool before the virtual disk is created. It doesn't seem that journal disks are used for existing virtual disks if they are added to the pool after the virtual disk is created.

Final configuration

For my configuration, I have now configured my 32 underlying disks over 5 controllers (15 over 2x PCI-X RAIDCore BC4852, 13 over 2x PCIe Supermicro AOC-SASLP-MV8, and 4 over motherboard SATA), as 24 disks presented to Windows. Some are grouped on my RAIDCore card to get as close as possible to 1TB disks, given various limitations. I am optimizing for space efficiency and sequential write speed, which are the effective limits for use as a network file share.

So I have: 5x 1TB, 5x (500GB+500GB RAID0), (640GB+250GB JBOD), (3x250GB RAID0), and 12x 500GB. This gets me 366MB/sec reads (note - for some reason, this is worse than the 490MB/sec when just using 8 disks in a virtual disk) and 76MB/sec writes (better than 55MB/sec on an 8-disk group). On space efficiency, I'm able to use all but 29GB in the pool in a single 14,266GB parity virtual disk.

I hope these results are interesting and helpful to others!

Edited by David Trounce, Friday, July 20, 2012 3:52 AM