Hello everyone,

I was assigned a work item wherein, I've a dataflow task on For Each Loop container at control flow of SSIS package. This For Each Loop container reads the CSV files from the specified location one by one, and populates a variable with current file name. Note, the tables where I would like to push the data from each CSV file are also having the same names as CSV file names.

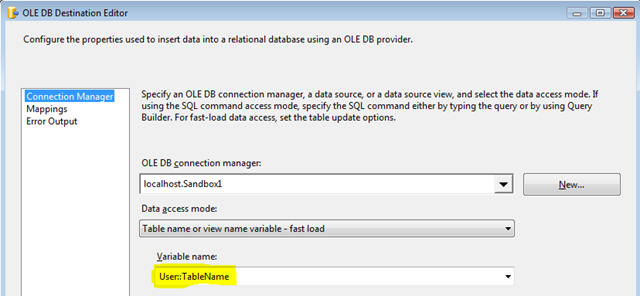

On the dataflow task, I've Flat File component as a source, this component uses the above variable to read the data of a particular file. Now, here my question comes, how can I move the data to destination, SQL table, using the same variable name?

I've tried to setup the OLE DB destination component dynamically but it executes well only for first time. It does not change the mappings as per the columns of the second CSV file. There're around 50 CSV files, each has different set off columns in it. These files needs to be migrated to SQL tables using the optimum way.

Does anybody know which is the best way to setup the Dataflow task for this requirement?

Also, I cannot use Bulk insert task here as we would like to keep a log of corrupted rows.

Any help would be much appreciated. It's very urgent.